Google DeepMind is launching Gemini 2.5 Deep Think, its most sophisticated AI reasoning model to date, capable of evaluating multiple ideas in parallel to arrive at the best possible answer. The model will be available starting Friday to subscribers of Google’s $250-per-month Ultra plan via the Gemini app.

First introduced at Google I/O in May 2025, Gemini 2.5 Deep Think is Google's first publicly available multi-agent AI system. Unlike conventional models that respond using a single AI agent, this system spawns multiple agents to explore different reasoning paths simultaneously. The process is computationally intensive, but it significantly improves the performance and depth of answers.

A variant of this model recently helped Google clinch a gold medal at the International Mathematical Olympiad (IMO), and now the company is making that version available to select mathematicians and researchers. According to Google, this specialized IMO model can take hours to work through complex problems, far beyond the near-instant responses expected from typical consumer AI tools. The company hopes it will become a valuable asset in academic and scientific research.

Google says Gemini 2.5 Deep Think represents a major leap from the version showcased earlier this year, citing newly developed reinforcement learning techniques that improve how the model chooses and evaluates reasoning paths. The company envisions use cases in areas requiring creativity, strategic thinking, and step-by-step problem-solving, from research to web development.


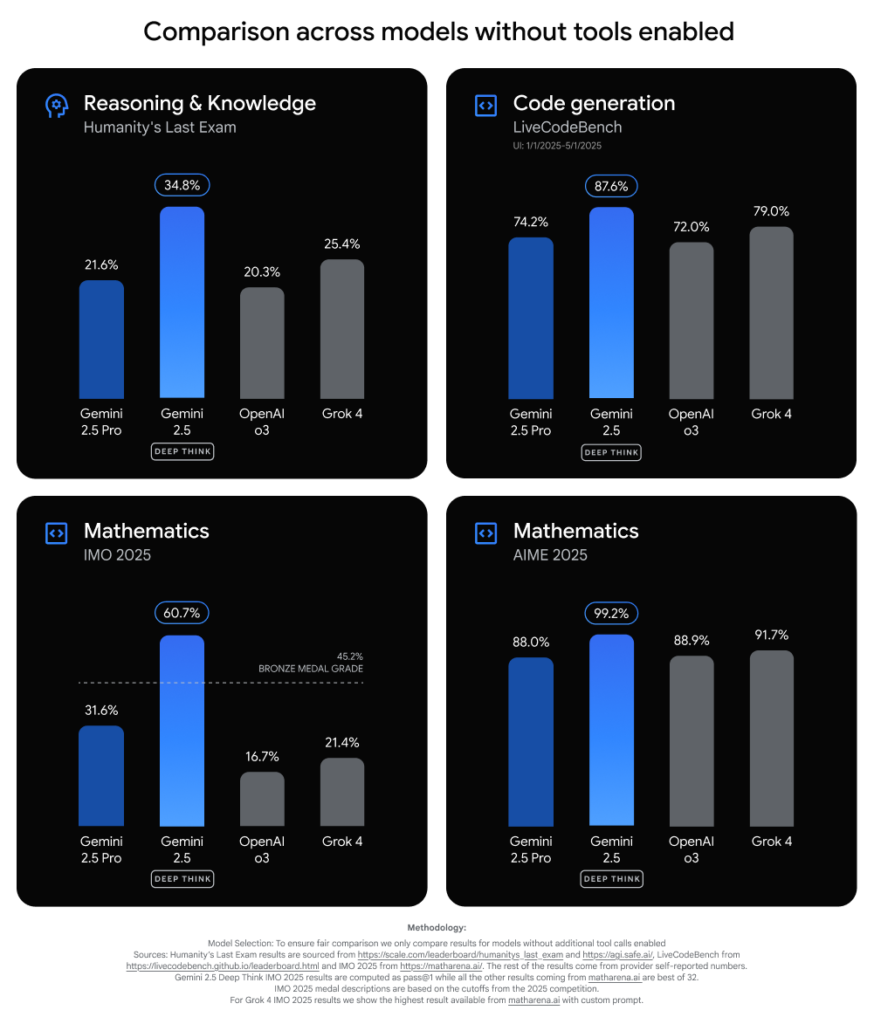

On benchmarks, Deep Think posts standout results. It scored 34.8% on Humanity's Last Exam (HLE), a rigorous benchmark of thousands of crowdsourced questions spanning disciplines such as math, the humanities, and science. That's ahead of xAI's Grok 4 (25.4%) and OpenAI's o3 (20.3%).

On LiveCodeBench v6, a test of advanced programming ability, Gemini 2.5 Deep Think achieved 87.6%, outperforming Grok 4 (79%) and OpenAI's o3 (72%). The model also integrates natively with tools such as Google Search and code execution environments, and it can generate longer, more refined responses than typical LLMs, particularly in tasks involving web design and development.

Google notes that multi-agent systems are becoming common among leading AI labs. xAI recently unveiled Grok 4 Heavy, its own multi-agent model with top-tier benchmark scores, while OpenAI and Anthropic have both confirmed they're building similar systems for math competitions and research applications.

However, the high compute demands of multi-agent models mean they’re unlikely to be widely available soon. Like xAI, Google is keeping Gemini 2.5 Deep Think behind its premium paywall, at least for now.

In the coming weeks, Google plans to expand access via the Gemini API to a select group of developers and enterprise testers. The goal: to see how businesses, researchers, and developers might use multi-agent AI in real-world applications.